Photonic Systems Integration Laboratory

Panoramic Light Field Camera

Our goal is to combine the high numerical aperture, wide-field imaging capabilities of monocentric lenses with the depth sensing and refocusing capacity of computational light field imaging to create a compact panoramic depth sensing camera. ‘Monocentric’ lenses, made from concentric spheres of glass, enable wide field of view (FOV), low F/# imaging with diffraction-limited resolution. These lenses are significantly more compact and less complex than comparable fish-eye lenses, but they form their image on a spherical surface rather than a flat one.
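To give a feel for what "low F/#" and "diffraction limited" mean numerically, the sketch below evaluates three textbook relations for an ideal circular aperture focused at infinity: NA ≈ 1/(2·F/#), Airy disk diameter = 2.44·λ·F/#, and incoherent cutoff frequency = 1/(λ·F/#). The F/2.5 and F/4 values are those of the prototype cameras described on this page. The 550 nm wavelength and the function name `diffraction_limits` are assumptions made only for illustration.

```python
def diffraction_limits(f_number, wavelength_um=0.55):
    """Ideal-lens estimates for a circular aperture imaging in air.

    wavelength_um = 0.55 (green light) is an assumed value, not one
    taken from the lens design described in the text.
    Returns (NA, Airy disk diameter in um, cutoff frequency in cycles/mm).
    """
    # Paraxial relation between F/# and image-space numerical aperture.
    numerical_aperture = 1.0 / (2.0 * f_number)
    # Diameter of the first dark ring of the Airy pattern.
    airy_diameter_um = 2.44 * wavelength_um * f_number
    # Spatial frequency where the incoherent diffraction MTF reaches zero.
    cutoff_cycles_per_mm = 1000.0 / (wavelength_um * f_number)
    return numerical_aperture, airy_diameter_um, cutoff_cycles_per_mm


if __name__ == "__main__":
    for n in (2.5, 4.0):
        na, airy, cutoff = diffraction_limits(n)
        print(f"F/{n}: NA ~ {na:.3f}, Airy ~ {airy:.2f} um, cutoff ~ {cutoff:.0f} cycles/mm")
```

These are upper bounds for a perfect lens. A real design approaches them only to the extent that its aberrations are corrected.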

There has been significant effort dedicated to capturing such spherical images with conventional flat CMOS image sensors. Previous work has explored using an array of secondary imagers to relay overlapping regions of the image onto conventional image sensors, or coupling the spherical image to one or more CMOS sensors via straight or curved imaging fiber bundles. Direct capture of the spherical image surface may become practical as the development of spherically curved CMOS sensors continues.

Here we explore a different approach. First, we move the challenge of focusing onto a curved image surface from the physical to the computational domain, using light field (LF) imaging and space-variant refocusing to correct the image curvature of a local region. Second, we use a faceted refractive ‘field consolidator’ lens to divide a continuous wide FOV curved image across an array of conventionally packaged CMOS sensors. After demonstrating this prototype system, we present a full circular 155° field lens design compatible with light field sensing or direct 164 MPixel capture of 13 spherical sub-images, fitting within a one inch diameter sphere.
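The idea of space-variant refocusing can be illustrated with a generic shift-and-add sketch. This is not the processing pipeline used for the prototype, which is not described here. The sketch assumes the light field is already decoded into sub-aperture views `lf[u, v, y, x]`, uses integer pixel shifts with wrap-around borders for brevity, and selects a refocus slope per pixel from a hypothetical `slope_map` (for example, one that varies with field position to follow the curved image surface). All function and variable names are illustrative.

```python
import numpy as np


def refocus(lf, slope):
    """Shift-and-add refocus of lf[u, v, y, x] at a single slope.

    slope is the shift in pixels per unit step away from the central view.
    """
    n_u, n_v, h, w = lf.shape
    uc, vc = (n_u - 1) / 2.0, (n_v - 1) / 2.0
    out = np.zeros((h, w), dtype=np.float64)
    for u in range(n_u):
        for v in range(n_v):
            dy = int(round(slope * (u - uc)))
            dx = int(round(slope * (v - vc)))
            # np.roll wraps at the borders, which is acceptable for a sketch.
            out += np.roll(lf[u, v], shift=(dy, dx), axis=(0, 1))
    return out / (n_u * n_v)


def refocus_space_variant(lf, slope_map, slopes):
    """Per pixel, pick the refocused image whose slope is nearest slope_map."""
    slopes = np.asarray(slopes, dtype=np.float64)
    stack = np.stack([refocus(lf, s) for s in slopes])  # (n_slopes, h, w)
    nearest = np.abs(slope_map[None] - slopes[:, None, None]).argmin(axis=0)
    return np.take_along_axis(stack, nearest[None], axis=0)[0]


if __name__ == "__main__":
    # Synthetic 3x3-view light field of one point with 2 px parallax per view.
    lf = np.zeros((3, 3, 16, 16))
    for u in range(3):
        for v in range(3):
            lf[u, v, 8 - 2 * (u - 1), 8 - 2 * (v - 1)] = 1.0
    print("peak at slope 0:", refocus(lf, 0.0).max())
    print("peak at slope 2:", refocus(lf, 2.0).max())
```

Choosing the nearest slope from a precomputed stack keeps the sketch short. A practical implementation would more likely interpolate sub-pixel shifts and blend between neighboring slopes to avoid visible seams.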

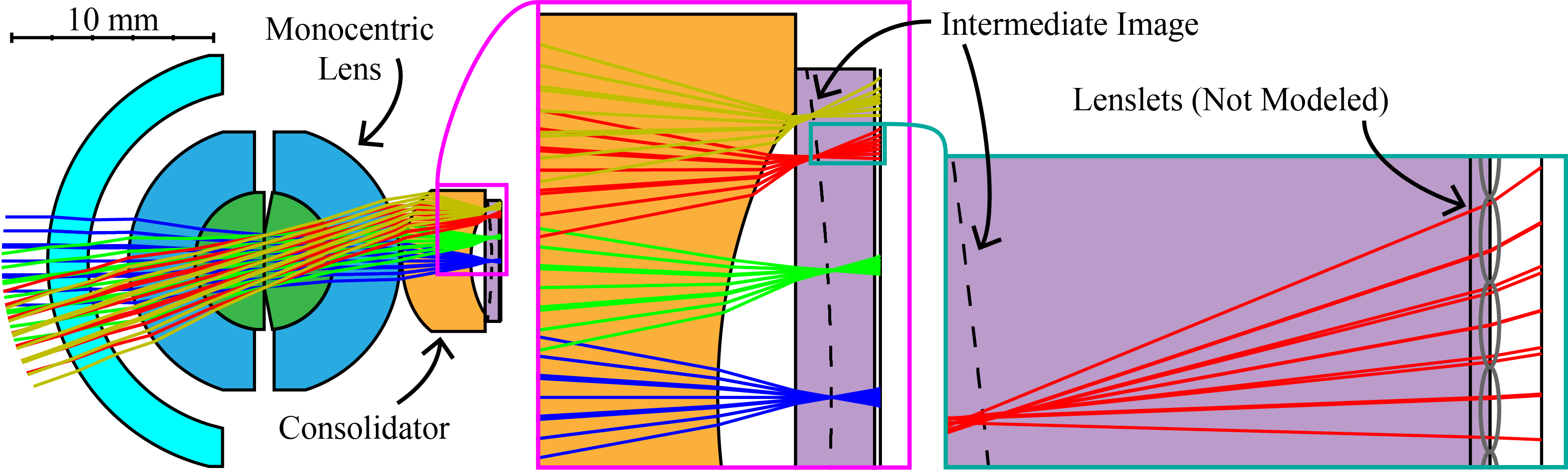

Sequential ray trace diagram of the monocentric lens and single consolidator. The largest (gold) and second largest (red) fields correspond to the diagonal and horizontal extremes of the sensor respectively. The lenslets are not modeled in the sequential design, but their position is shown in the inset.

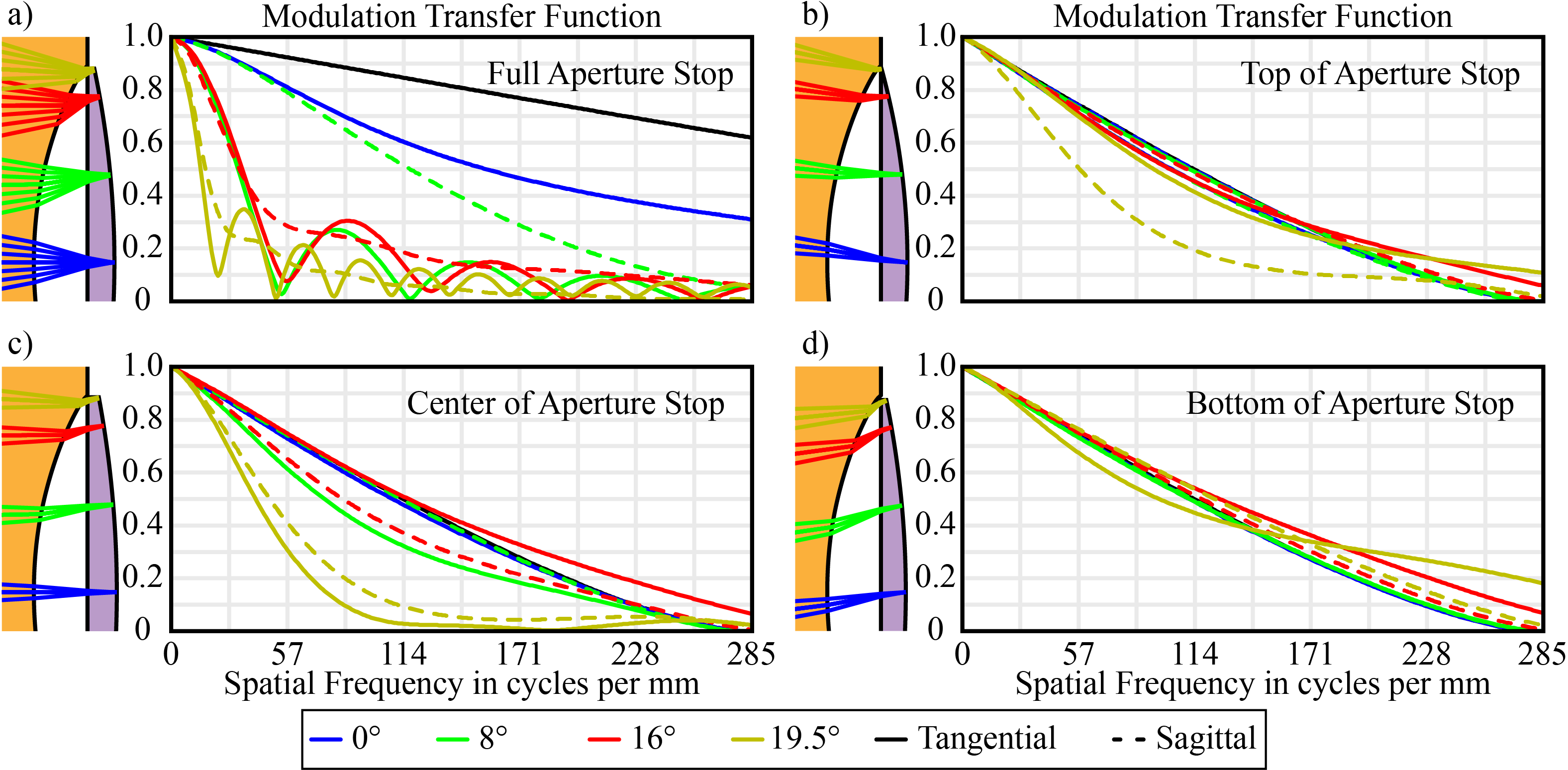

Monochromatic MTF plots of the sequential design at the spherical intermediate sub-image of light passing through the full (a), top (b), center (c), and bottom (d) regions of the aperture stop. The poor performance seen in the full aperture (a), which is equivalent to direct image capture, is improved by aperture division by the LF relay (b through d).

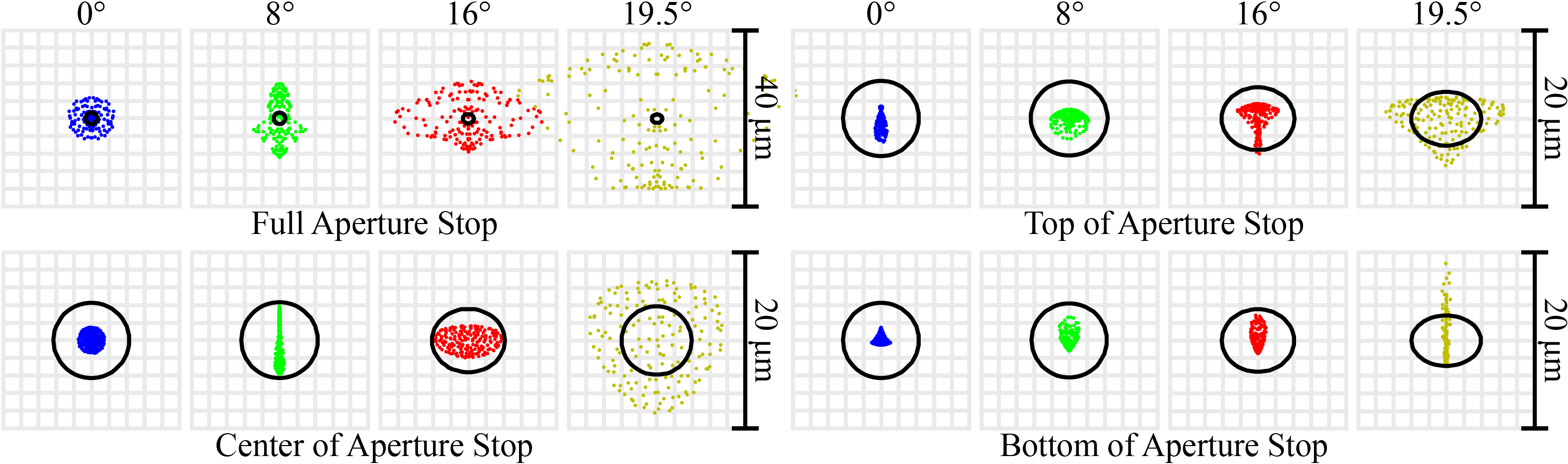

Monochromatic geometrical spot diagrams for the sequential design at the intermediate image of light passing through the full, top, center, and bottom regions of the aperture stop.

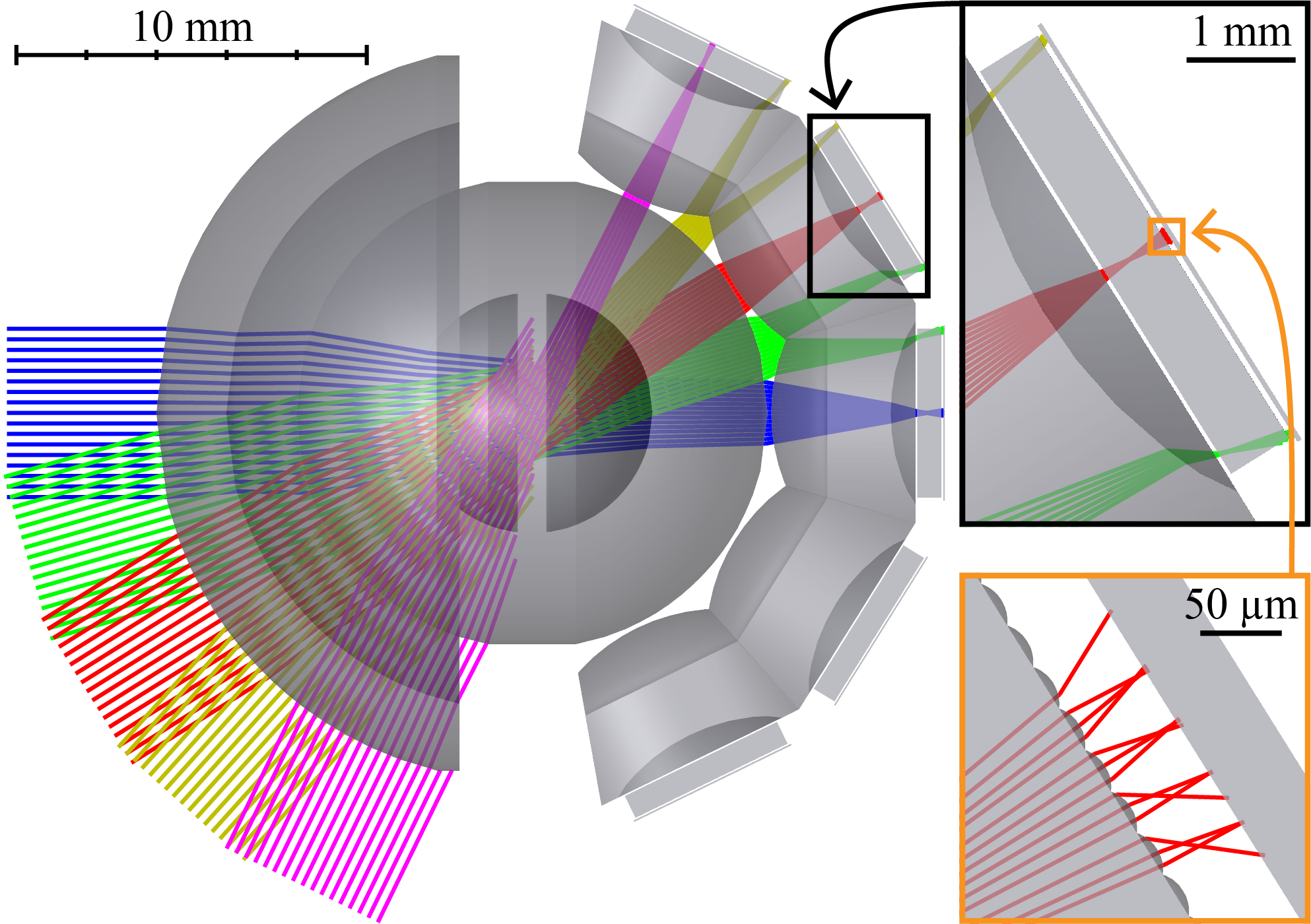

Top-down non-sequential ray trace diagram of five horizontal fields at infinite conjugates.

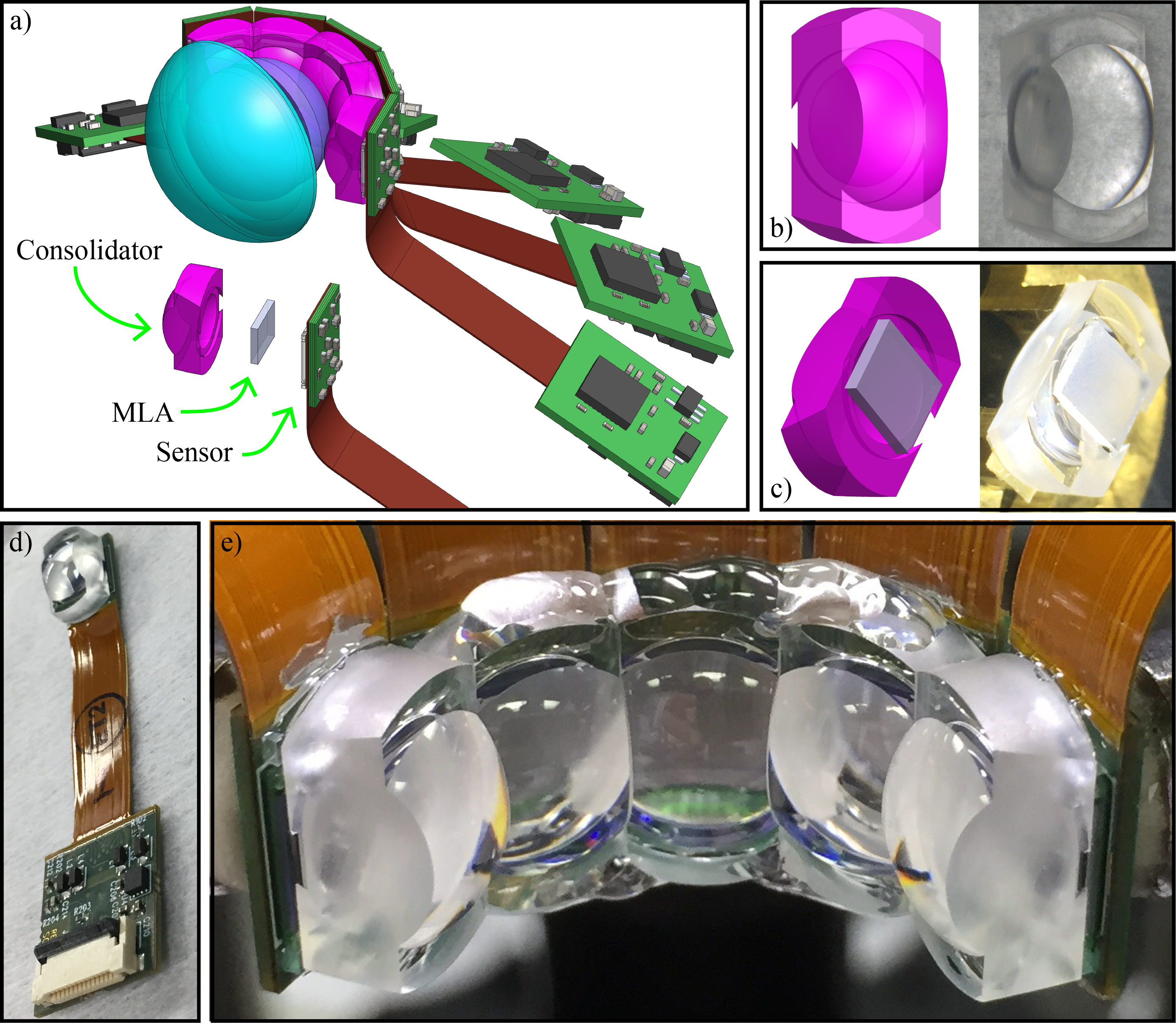

(a) CAD assembly of the five-sensor array behind a monocentric lens, with an exploded sensor stack to the lower left. (b) CAD and fabricated consolidator after wedge cut and (c) with microlens array attached. (d) Final assembled consolidating LF image sensor on flex and (e) after assembly into the full panoramic array.

Photos of the lab scene taken with a fish-eye lens (left), the F/2.5 three-sensor camera (top right), and the F/4 five-sensor camera (bottom right).

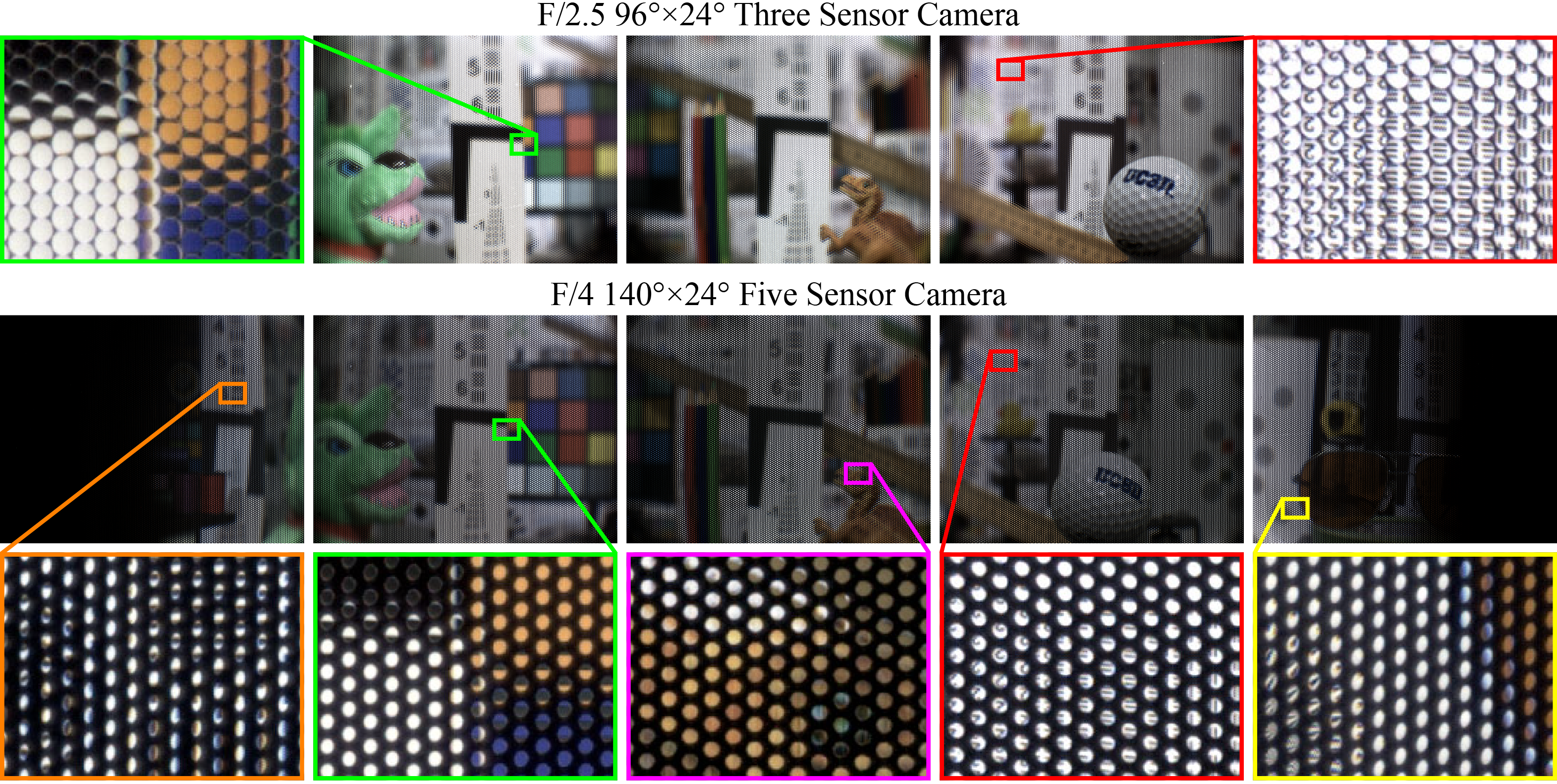

RAW LF data (after demosaicing) of the lab scene for the F/2.5 three-sensor camera (top) and F/4 five-sensor camera (bottom). The zoomed insets show the difference between the balanced under- and over-filling of the F/2.5 lens and the under-filling of the F/4 lens.

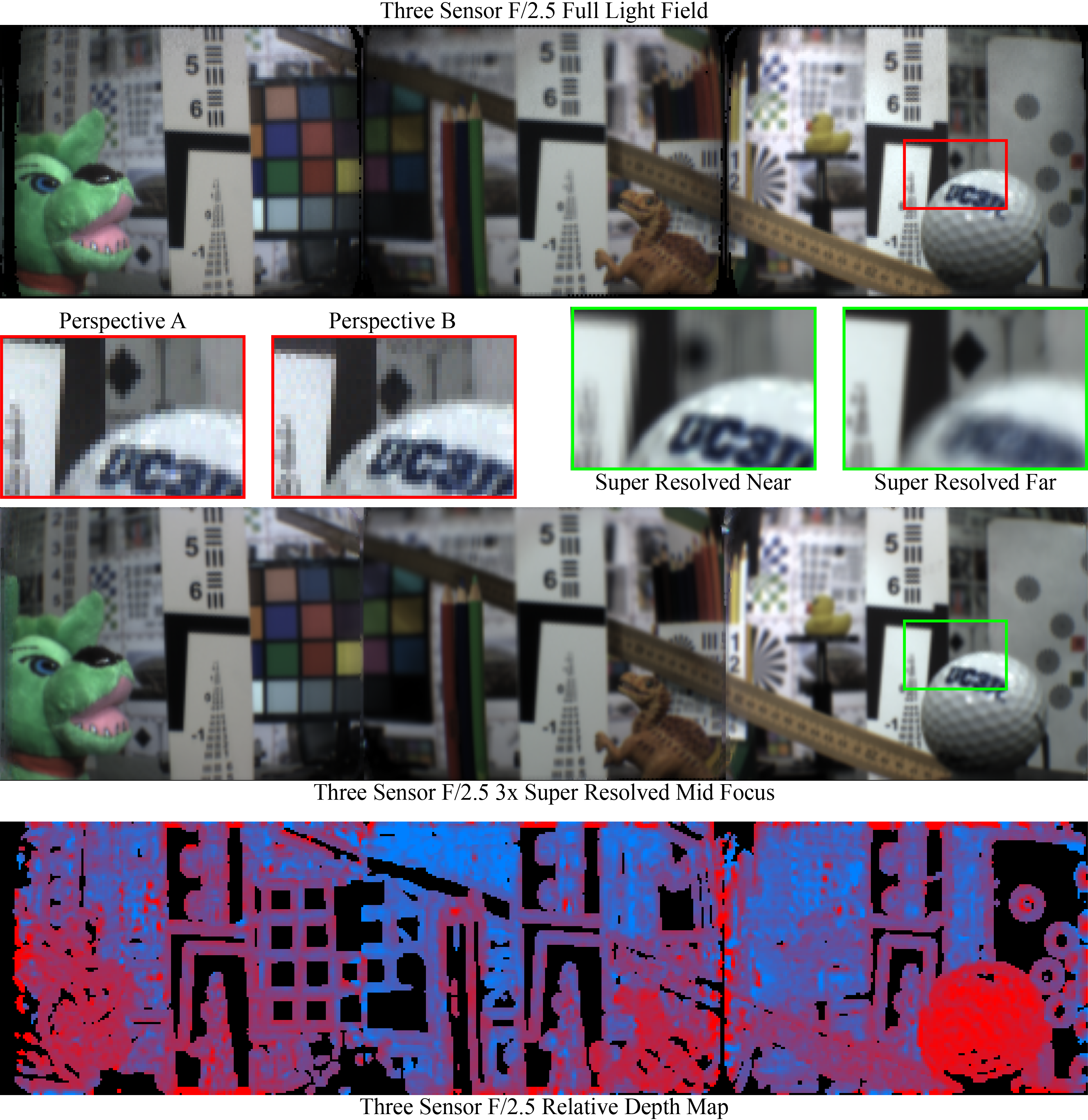

Processed LF data of the lab scene taken with the F/2.5 three sensor camera. The red region of interest from the full LF (top) shows parallax between two different vertically shifted perspectives contained within a single exposure. The green region of interest from the 3× super resolved image (middle) shows refocusing between near and far objects. The corresponding relative depth map for this image is shown at the bottom.
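The parallax between perspectives is what makes a relative depth map possible. How the depth maps shown here were computed is not stated, so the following is only a generic photo-consistency sketch. For each candidate slope, the sub-aperture views are shifted into alignment and the per-pixel variance across views is measured. The slope with the lowest variance is kept as a proxy for relative depth. The `lf[u, v, y, x]` layout, the integer shifts with wrap-around, and all names are assumptions for illustration.

```python
import numpy as np


def sheared_views(lf, slope):
    """Shift every view of lf[u, v, y, x] by slope * (view offset from center)."""
    n_u, n_v, _, _ = lf.shape
    uc, vc = (n_u - 1) / 2.0, (n_v - 1) / 2.0
    views = []
    for u in range(n_u):
        for v in range(n_v):
            dy = int(round(slope * (u - uc)))
            dx = int(round(slope * (v - vc)))
            # Wrap-around borders keep the sketch short.
            views.append(np.roll(lf[u, v], shift=(dy, dx), axis=(0, 1)))
    return np.stack(views)  # (n_views, h, w)


def relative_depth(lf, slopes):
    """Per pixel, return the slope at which the views agree best.

    The result is a disparity-like quantity, i.e. relative rather than
    metric depth.
    """
    slopes = np.asarray(slopes, dtype=np.float64)
    # Low variance across aligned views means the pixel is in focus.
    cost = np.stack([sheared_views(lf, s).var(axis=0) for s in slopes])
    return slopes[cost.argmin(axis=0)]


if __name__ == "__main__":
    # Synthetic textured plane with 1 px parallax per view step.
    rng = np.random.default_rng(0)
    base = rng.random((32, 32))
    lf = np.empty((3, 3, 32, 32))
    for u in range(3):
        for v in range(3):
            lf[u, v] = np.roll(base, shift=(-(u - 1), -(v - 1)), axis=(0, 1))
    depth = relative_depth(lf, [0.0, 1.0, 2.0])
    print("unique slopes found:", np.unique(depth))
```

On real data the cost would usually be aggregated over a small window and regularized, since per-pixel variance is noisy in textureless regions.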

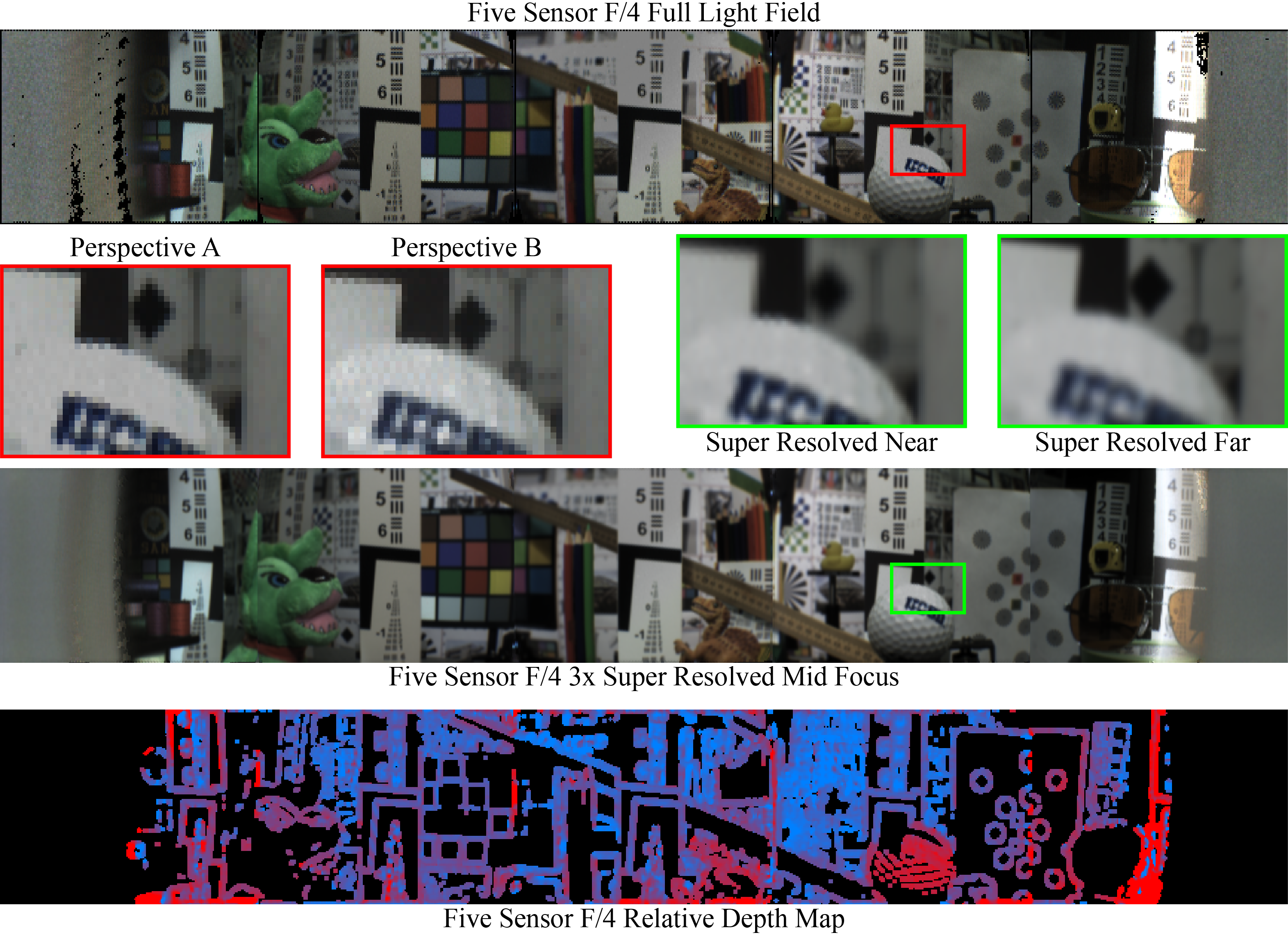

Processed LF data of the lab scene taken with the F/4 five sensor camera. The red region of interest from the full LF (top) shows parallax between two different vertically shifted perspectives contained within a single exposure. The green region of interest from the 3× super resolved image (middle) shows refocusing between near and far objects. The corresponding relative depth map for this image is shown at the bottom.

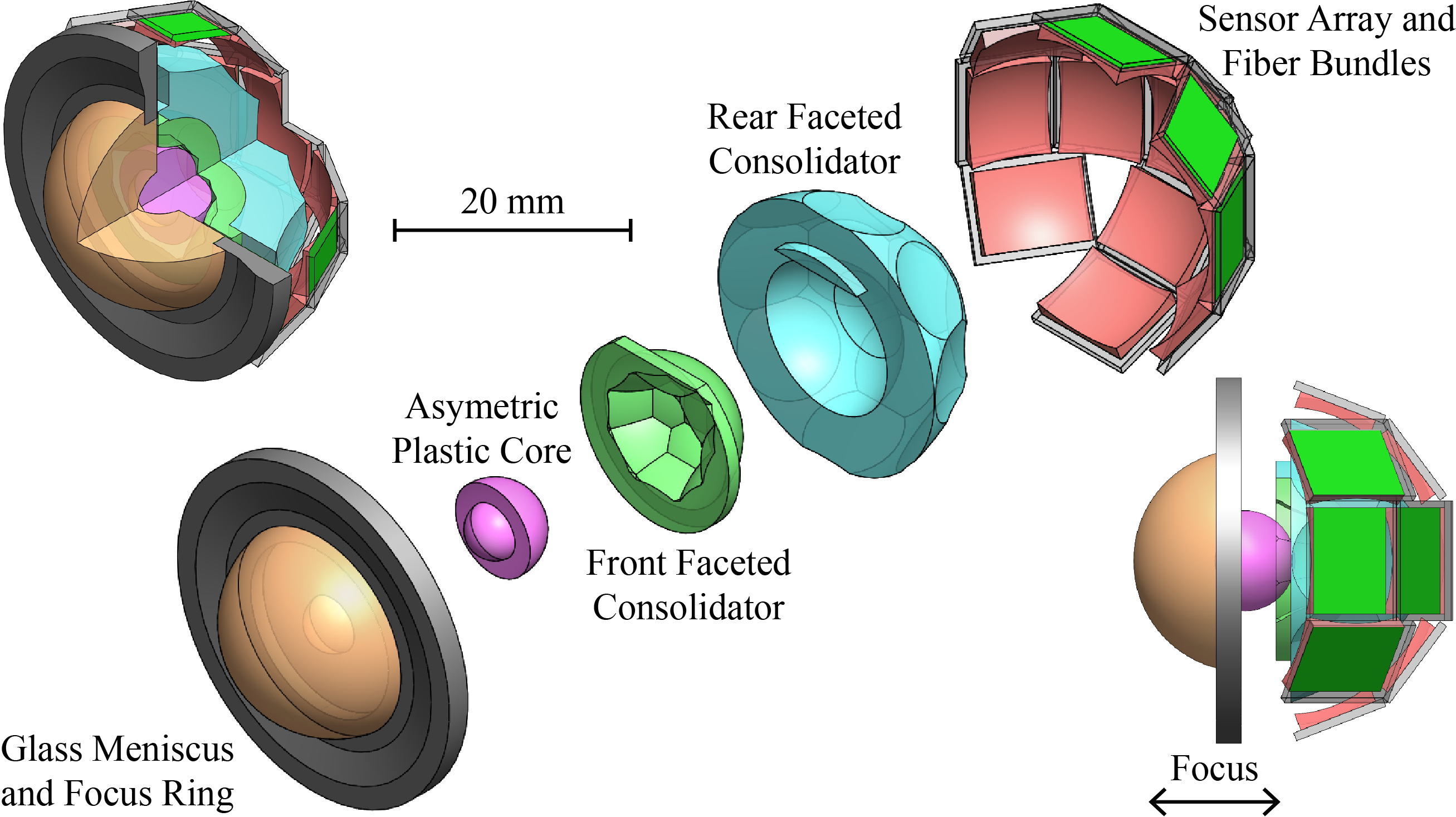

CAD renderings of the 155° full field lens design with short fiber bundle coupled image sensors.

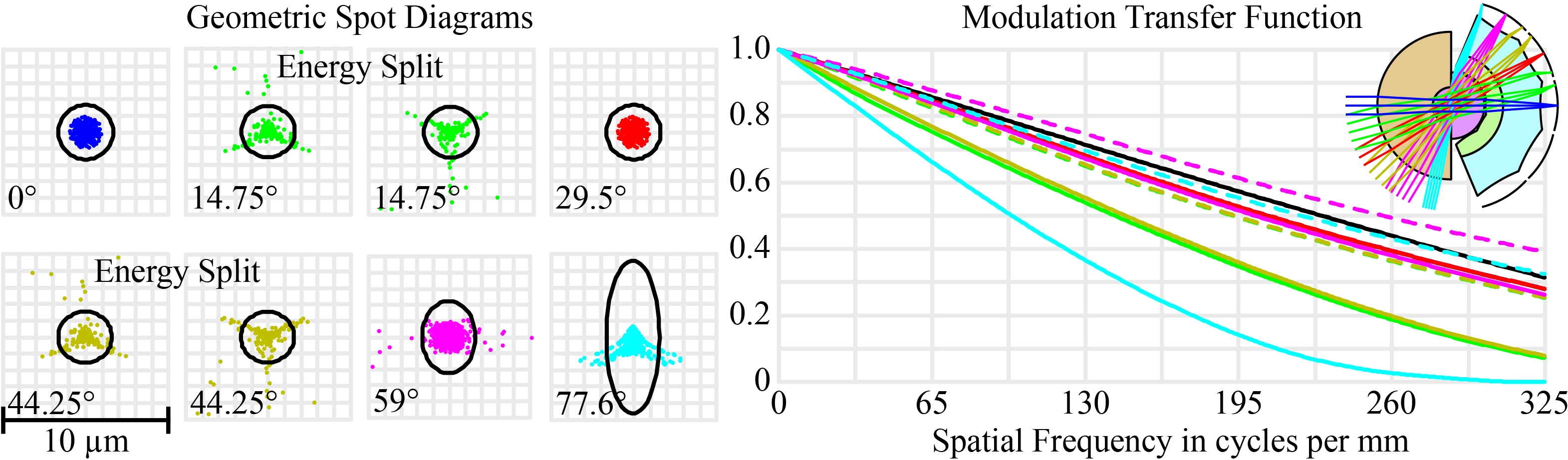

Spot diagrams (left) and MTF resolution plot (right) for the full field lens design.

JOURNAL PUBLICATIONS: